Industrial IoT (IIoT) is entering a new phase. For years, value creation depended on moving data from machines to centralized cloud platforms. Today, latency constraints, bandwidth economics, security considerations, and operational continuity are reshaping how intelligence is deployed. Increasingly, decision-making is moving closer to where data is generated. Edge AI is intelligence embedded into operations, allowing systems to sense, decide, and act instantly without cloud dependency. It is no longer an experiment or a niche optimization. It represents the rise of real-time AI and AI at the edge in industrial systems that must operate continuously, safely, and at scale.

From Centralized Insight to Local Decisions

Traditional IIoT architectures were designed around centralized analytics:

- data aggregated in the cloud

- models updated periodically

- connectivity assumed to be stable

These assumptions remain effective for enterprise IT workloads. Industrial environments, however, operate under a different set of priorities. Factories, utilities, and remote assets function with:

- millisecond-level control-loop requirements

- intermittent or bandwidth-constrained connectivity

- strict uptime and safety expectations

Industry analyses indicate that over 70% of industrial data is never transmitted to the cloud, often because its value is time-sensitive or context-dependent. Edge AI enables this data to be processed locally through on-device AI and edge inference, supporting timely, situational decisions that align with the operational rhythm of machines and processes. A useful way to think about Edge AI is local decision intelligence: systems designed to sense, decide, and respond within the same physical and temporal context as the operation itself.

Where Edge AI Delivers Measurable Impact: Use Cases in Industrial IoT

Worker Safety and Operational Awareness:

One of the fastest-growing applications of Edge AI in industrial environments is worker safety, particularly where real-time response is critical. Edge-based computer vision is increasingly used for:

- PPE compliance (helmets, vests, gloves)

- restricted-area access (under cranes, forklift paths, hazardous zones)

- proximity alerts between workers and moving equipment

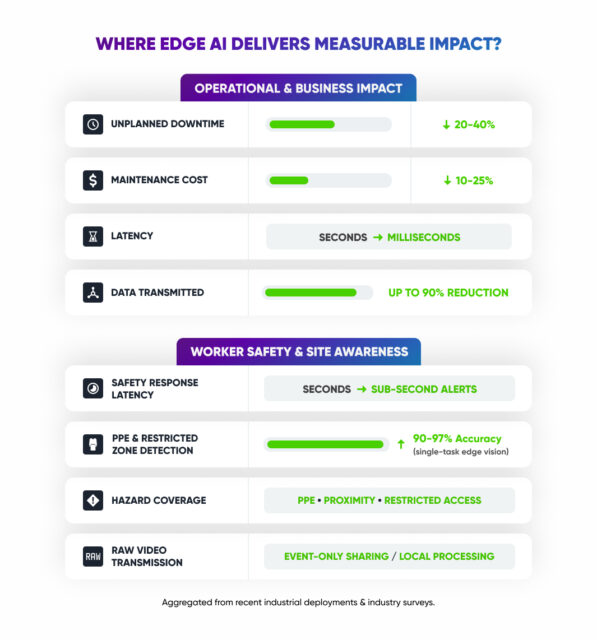

Recent research shows that lightweight vision models can achieve 90%+ accuracy for single safety tasks when deployed on resource-constrained edge devices. Processing video locally enables sub-second alerts, which is essential in dynamic, high-risk environments where cloud latency is impractical.

At the same time, studies highlight the importance of system-level design. As safety scenarios become more complex (multiple workers, overlapping hazards, changing environments), edge deployments must balance model efficiency, power consumption, and robustness over time. This has positioned Edge AI not just as a detection tool, but as part of a broader real-time safety infrastructure.
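To make the proximity-alert case concrete, here is a minimal sketch of the decision step that would run on the edge device after detection. It assumes an upstream vision model has already mapped workers and vehicles to floor coordinates in meters. The function name, the input dictionaries, and the 3-meter default are illustrative assumptions, not values from any standard or product.

```python
from math import hypot


def proximity_alerts(workers, vehicles, min_distance_m=3.0):
    """Return (worker_id, vehicle_id, distance_m) for every pair that is too close.

    workers, vehicles: dicts mapping an id to an (x, y) floor position in meters.
    min_distance_m: hypothetical safety radius; a real site would set its own.
    """
    alerts = []
    for worker_id, (wx, wy) in workers.items():
        for vehicle_id, (vx, vy) in vehicles.items():
            distance = hypot(wx - vx, wy - vy)
            if distance < min_distance_m:
                alerts.append((worker_id, vehicle_id, round(distance, 2)))
    return alerts


# Example call with made-up positions
workers = {"w1": (0.0, 0.0), "w2": (10.0, 10.0)}
vehicles = {"forklift": (1.5, 2.0)}
alerts = proximity_alerts(workers, vehicles)
```

Because the check is a few arithmetic operations per pair, it can run on every frame locally, which is what makes sub-second alerting feasible without a round trip to the cloud.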

Predictive Maintenance (PdM):

Predictive maintenance has always been local-first. High-frequency vibration, temperature, and acoustic signals are typically processed at or near the asset, because continuously moving this data to the cloud is neither practical nor economical. The limitation of many traditional PdM systems is not where they run, but how they reason. Rule-based controllers and static thresholds struggle to adapt as equipment behavior evolves, operating conditions change, or failure patterns become more complex. Edge AI upgrades this local processing model by enabling continuous, data-driven pattern recognition directly at the asset without requiring raw data to be streamed upstream for deeper analysis.
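The difference between a static threshold and data-driven detection can be illustrated with a deliberately simple sketch. The class below keeps a rolling baseline of recent readings and flags values that deviate strongly from it. This is a basic z-score check, standing in for the richer models a real deployment would use; the class name, window size, and threshold are assumptions chosen for illustration.

```python
from collections import deque
from math import sqrt


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a rolling baseline."""

    def __init__(self, window=50, z_threshold=4.0, min_samples=10):
        self.window = deque(maxlen=window)  # recent "normal" readings
        self.z_threshold = z_threshold      # how many std devs count as anomalous
        self.min_samples = min_samples      # readings needed before judging

    def update(self, value):
        """Return True if `value` is anomalous relative to recent history."""
        anomalous = False
        if len(self.window) >= self.min_samples:
            mean = sum(self.window) / len(self.window)
            var = sum((x - mean) ** 2 for x in self.window) / len(self.window)
            std = sqrt(var)
            if std > 0 and abs(value - mean) / std > self.z_threshold:
                anomalous = True
        # Only normal readings update the baseline, so a fault does not
        # quietly become the new "normal".
        if not anomalous:
            self.window.append(value)
        return anomalous


# Example: vibration amplitude hovering around 1.0, then a sudden spike
detector = RollingAnomalyDetector(window=20)
warmup_flags = [detector.update(1.0 + 0.1 * (i % 2)) for i in range(20)]
spike_flag = detector.update(5.0)
```

A fixed alarm limit of, say, 10.0 would never fire on the spike to 5.0, while the rolling baseline catches it because it is judged against how this particular asset has recently behaved.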

When AI-driven inference runs locally, industrial deployments report:

- 20–40% reduction in unplanned downtime

- 10–25% lower maintenance costs

Edge AI makes it possible to extract more value from large volumes of sensor data at the source, delivering more intelligent detection at a fraction of the cost and latency of cloud-centric approaches.

Visual Quality Inspection:

Running computer vision workloads as real-time AI at the edge reduces inspection latency from seconds to milliseconds. This matters most for:

- inline defect detection

- high-speed production lines

- safety-critical inspections

Edge deployment allows inspection systems to operate consistently even when connectivity is limited, while keeping sensitive visual data on-site.

Behavioral and Event-Based Analytics

Beyond individual detections, Edge AI is increasingly used for behavioral and situational awareness, such as:

- loitering or trespassing detection in restricted zones

- unsafe movement patterns near heavy machinery

- early indicators of escalating risk based on motion and proximity

Processing these signals locally using on-device AI enables real-time intervention while reducing the need to stream continuous video feeds. Instead, edge systems transmit events and summaries, not raw footage, helping organizations manage bandwidth and privacy constraints.

Bandwidth and Cost Efficiency

Rather than streaming raw sensor or video data, industrial edge systems transmit only relevant events or summaries. In manufacturing, logistics, and safety monitoring environments, this approach has reduced network usage by up to 90%, improving scalability while lowering operating costs.
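The idea of sending summaries instead of raw data can be sketched in a few lines. The example below collapses a batch of readings into basic statistics plus any threshold-crossing events, then compares the JSON payload sizes. The function names, the payload shape, and the synthetic data are all assumptions for illustration; the actual saving depends heavily on the signal, the event rate, and the wire format.

```python
import json


def summarize(readings, threshold):
    """Collapse a batch of raw readings into a summary plus threshold events."""
    events = [
        {"index": i, "value": v}
        for i, v in enumerate(readings)
        if v > threshold
    ]
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": sum(readings) / len(readings),
        "events": events,
    }


def payload_bytes(obj):
    """Size of the object when serialized as UTF-8 JSON."""
    return len(json.dumps(obj).encode("utf-8"))


def bandwidth_saving(readings, threshold):
    """Fraction of bytes saved by sending the summary instead of raw readings."""
    raw = payload_bytes(readings)
    compact = payload_bytes(summarize(readings, threshold))
    return 1 - compact / raw


# Synthetic batch: 1,000 temperature-like readings with one excursion
readings = [20.0 + (i % 5) * 0.1 for i in range(1000)]
readings[500] = 95.0
summary = summarize(readings, threshold=90.0)
saving = bandwidth_saving(readings, threshold=90.0)
```

The single excursion still reaches the cloud as an event, so the reduction in traffic does not come at the cost of losing the one reading that mattered.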

Why Lab-Tested Edge AI Fails at Enterprise Scale

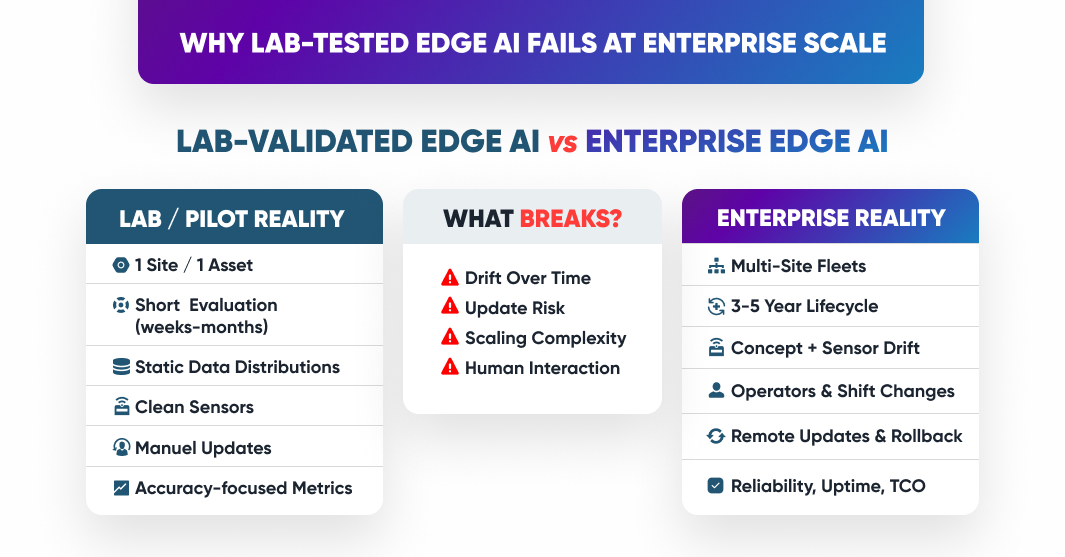

Despite progress, much of the Edge AI literature still relies on:

- single-site pilots

- short evaluation windows

- controlled environments

This hides the hardest problems.

What actually breaks Edge AI in production:

- Concept drift: equipment ages, processes change

- Sensor drift: calibration shifts over months or years

- Operational variance: different operators, shifts, and maintenance practices

- Lifecycle friction: updates, rollbacks, and monitoring at scale

In enterprise environments, success is not defined by peak accuracy. It is defined by predictable behavior over time.
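Concept and sensor drift are easier to manage when they are measured on the device itself. As a rough sketch, the functions below compare a recent window of readings against a baseline captured at commissioning and report how far the mean has shifted, in units of the baseline's standard deviation. The function names and the limit of 2.0 are illustrative assumptions; production systems typically use more robust statistical tests.

```python
from statistics import mean, stdev


def drift_score(baseline, recent):
    """Shift of the recent mean from the baseline mean, in baseline std devs."""
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variance; cannot scale the shift")
    return abs(mean(recent) - mean(baseline)) / sigma


def has_drifted(baseline, recent, limit=2.0):
    """True when the recent window has moved beyond the (assumed) limit."""
    return drift_score(baseline, recent) > limit


# Made-up sensor values: a commissioning baseline and two later windows
baseline = [10.0, 10.2, 9.8, 10.1, 9.9] * 4
recent_stable = [10.0, 10.1, 9.9, 10.0]
recent_shifted = [11.0, 11.2, 10.9, 11.1]

stable_result = has_drifted(baseline, recent_stable)
shifted_result = has_drifted(baseline, recent_shifted)
```

A check like this does not say why the signal moved, only that the model is now seeing inputs unlike those it was validated on, which is the trigger for recalibration or retraining.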

The Architecture Shift: From Models to Systems

Edge AI is forcing a rethink of system design. Instead of optimizing components independently, leading deployments focus on cross-layer co-design:

- hardware capabilities of industrial edge devices aligned with inference workloads

- runtimes that respect real-time constraints

- orchestration aware of process criticality, not just CPU usage

At scale, thousands of devices must be:

- provisioned remotely

- updated safely

- monitored continuously

- recovered automatically

This is why edge-cloud collaboration and connectivity are becoming central topics, not for performance alone, but for operational resilience.
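"Updated safely" and "recovered automatically" usually translate into staged rollouts with a rollback path. The sketch below updates a fleet in small batches and restores every touched device if any batch fails a health check. The fleet dictionary, the `is_healthy` callback, and the batch size are hypothetical stand-ins for whatever device management layer is actually in use.

```python
def staged_rollout(devices, new_version, is_healthy, batch_size=2):
    """Update devices in batches; roll back all touched devices on failure.

    devices: dict mapping device id -> installed version (mutated in place).
    is_healthy: callable(device_id, version) -> bool, a stand-in health probe.
    Returns True if every device ends on new_version, False if rolled back.
    """
    previous = {}  # device id -> version before this rollout
    ids = list(devices)
    for start in range(0, len(ids), batch_size):
        batch = ids[start:start + batch_size]
        for device_id in batch:
            previous[device_id] = devices[device_id]
            devices[device_id] = new_version
        if not all(is_healthy(device_id, new_version) for device_id in batch):
            # Restore every device touched so far, including earlier batches.
            for device_id, old_version in previous.items():
                devices[device_id] = old_version
            return False
    return True


# Example: one device ("c") fails its health check after the update
fleet = {"a": "1.0", "b": "1.0", "c": "1.0", "d": "1.0"}
succeeded = staged_rollout(fleet, "1.1", lambda device_id, version: device_id != "c")
```

Rolling back the whole fleet rather than only the failed batch is a design choice made here for simplicity; it keeps every device on one known-good version, at the cost of undoing updates that had succeeded.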

Trust, Security, and Human Factors Matter More Than Ever

Industrial systems are socio-technical by nature. Operators must understand system behavior, trust alerts under pressure, and intervene safely when needed.

Yet many Edge AI systems still treat:

- security

- explainability

- operational transparency

as secondary concerns. In practice, trust is built through consistency, not dashboards. Systems that recover cleanly from failure and behave predictably over time are the ones that get adopted.

Building Reliable Edge AI Systems in the Real World

At Sixfab, we see Edge AI not as a product category, but as a long-term systems challenge. The industry is moving past the question “Can we run AI at the edge?” The real question now is:

Can edge intelligence survive years of industrial reality: drift, scale, people, and change?

Answering that requires:

- designing for lifecycle, not pilots

- validating systems over time, not just in testbeds

- treating reliability, updates, and recovery as first-class design goals

Edge AI is becoming the standard because industry demands systems that work where the world is messy, not where assumptions are clean. That shift is only just beginning, and at Sixfab, we build systems like ALPON Edge AI Platforms to accelerate it.